At a startup, we had a very common IT infrastructure setup: lots of EC2 instances running many different services. Across this fleet, we used Jenkins to run various tasks, such as daily backups of log files or periodic status checks. From the beginning, I was trying to answer one question:

How do I connect to all these instances, store SSH keys securely, and keep them updated in the laziest possible way?

Then I found AWS SSM (Systems Manager) and its sub-service Run Command. Its simplicity surprised me, and it removed all my concerns about the original SSH approach.

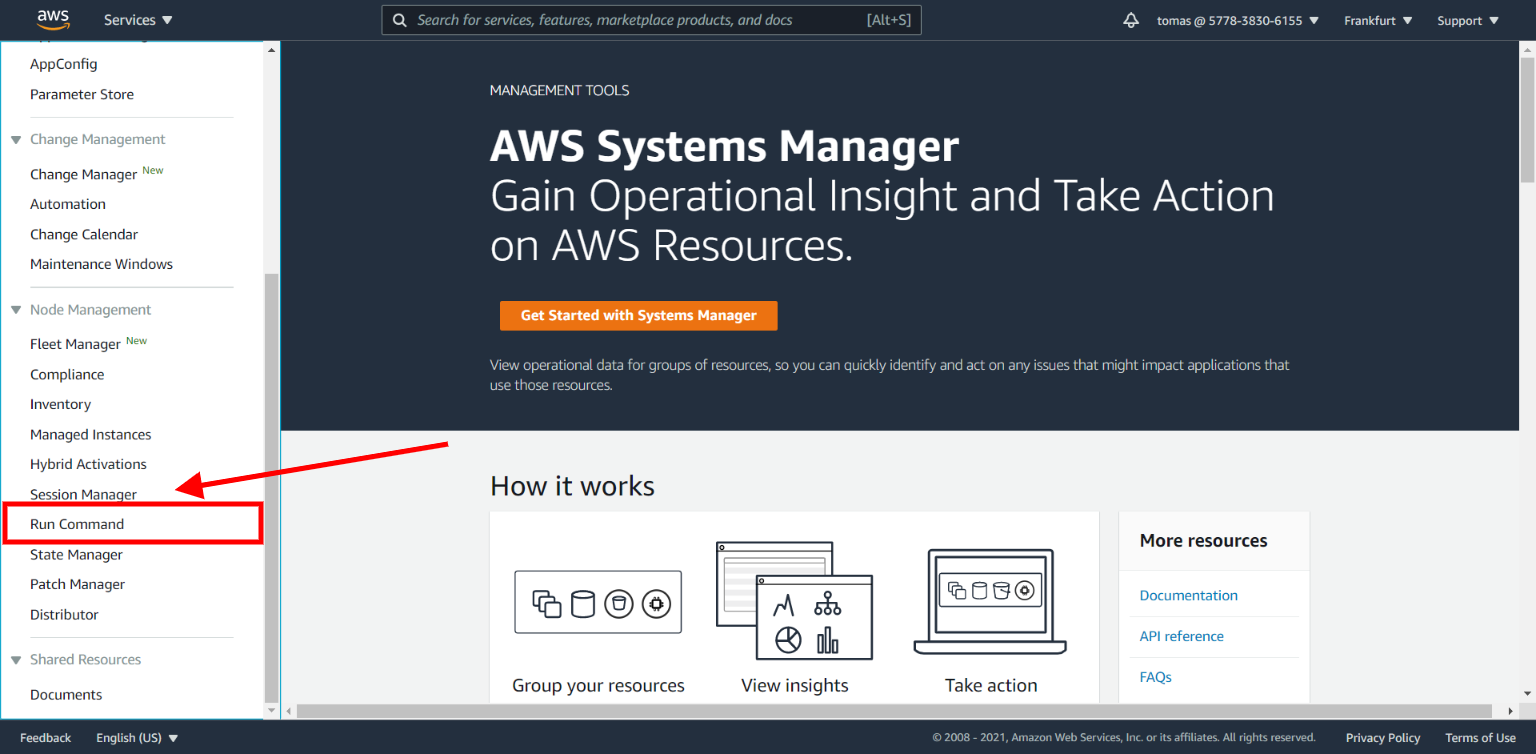

AWS Run Command

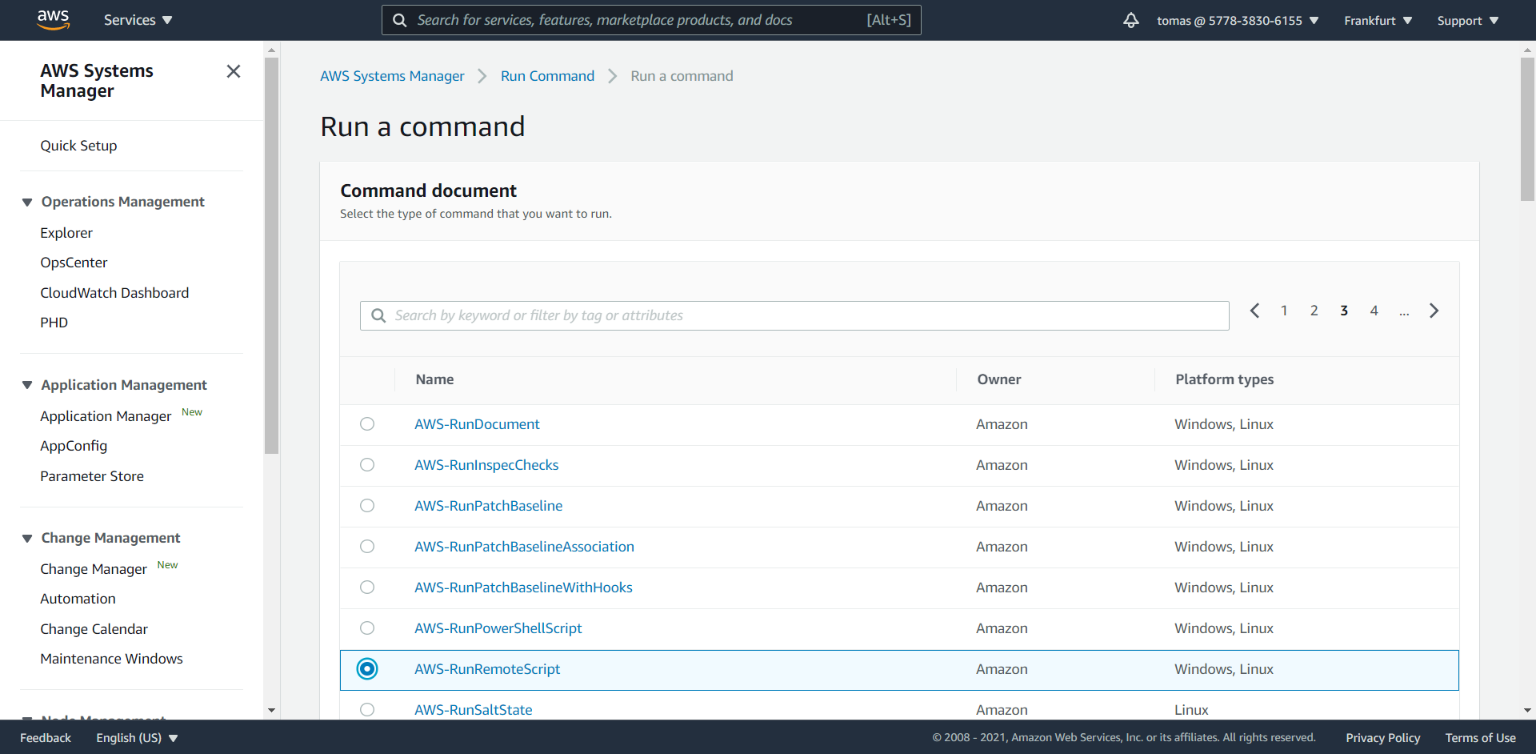

Run Command lets you execute your own script files and commands on EC2 instances securely. It comes with predefined templates (SSM documents) for running different kinds of remote jobs on all OS platforms supported by AWS. We are interested in the AWS-RunRemoteScript document, which downloads a script file and runs it with arguments.

Another option is AWS-RunShellScript, which runs shell commands passed inline instead of a script file.
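For comparison, here is a minimal sketch of sending a one-off command with AWS-RunShellScript from the CLI. The function name run_shell_command and the DRY_RUN switch (which only prints the aws command) are my own illustrative assumptions, and App/Nginx are just example tag values.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of AWS): send one inline command to every
# instance carrying a given tag, via the predefined AWS-RunShellScript document.
# DRY_RUN=1 only prints the aws command instead of running it.
# The command must not contain double quotes or backslashes (no JSON escaping here).
run_shell_command() {
  local tag_key="$1" tag_value="$2" command="$3"
  if [[ -z "$tag_key" || -z "$tag_value" || -z "$command" ]]; then
    echo "usage: run_shell_command <tag-key> <tag-value> <command>" >&2
    return 1
  fi
  # --query keeps only the CommandId, which is needed to read the output later.
  ${DRY_RUN:+echo} aws ssm send-command \
    --document-name "AWS-RunShellScript" \
    --targets "Key=tag:${tag_key},Values=${tag_value}" \
    --parameters "{\"commands\":[\"${command}\"]}" \
    --query "Command.CommandId" \
    --output text
}
```

For example, run_shell_command App Nginx "df -h" prints the CommandId of the new command. I used the JSON form of --parameters because, unlike the shorthand form, it tolerates commas inside the command.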

How does it work?

AWS Systems Manager uses Run Command to manage tasks on any EC2 instance where the SSM agent is running. Its key strength is invoking commands on demand, on any number of instances, and showing the output in the AWS Console or through the CLI.
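To see the output from the CLI, a sketch along these lines should work: wait for the command to finish on one instance, then read its stdout. show_command_output and DRY_RUN are hypothetical names of mine; aws ssm wait command-executed and aws ssm get-command-invocation are the real CLI calls it relies on.

```bash
#!/usr/bin/env bash
# Hypothetical helper: block until a command finishes on one instance,
# then print its status and stdout. DRY_RUN=1 only prints the aws calls.
show_command_output() {
  local command_id="$1" instance_id="$2"
  if [[ -z "$command_id" || -z "$instance_id" ]]; then
    echo "usage: show_command_output <command-id> <instance-id>" >&2
    return 1
  fi
  # The waiter polls until the invocation reaches a final state;
  # it exits non-zero if the command failed or the waiter gave up.
  ${DRY_RUN:+echo} aws ssm wait command-executed \
    --command-id "$command_id" --instance-id "$instance_id" \
    || echo "warning: command did not finish successfully" >&2
  ${DRY_RUN:+echo} aws ssm get-command-invocation \
    --command-id "$command_id" --instance-id "$instance_id" \
    --query "[Status, StandardOutputContent]" --output text
}
```

StandardOutputContent is truncated for long outputs, so an S3 output bucket (configured later in this article) remains the place for full logs.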

Let’s demonstrate Run Command with a simple scenario: backing up Nginx log files to an S3 bucket. We’ll start with the AWS Console.

Permissions and Prerequisites

Before we go straight to command execution, we need to complete a few important preparation steps:

- Install SSM agent on EC2

- Create S3 buckets

- Add permissions

- Create backup.sh

Install SSM agent on EC2

Installation is simple and well documented in the AWS Docs[1]. On Ubuntu instances, connect to each instance once and run the following command:

```bash
sudo snap install amazon-ssm-agent --classic
```

The SSM agent is managed with standard systemctl commands:

```bash
# start
sudo systemctl start snap.amazon-ssm-agent.amazon-ssm-agent.service

# stop
sudo systemctl stop snap.amazon-ssm-agent.amazon-ssm-agent.service

# status
sudo systemctl status snap.amazon-ssm-agent.amazon-ssm-agent.service
```
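If you manage several instances by hand, a small wrapper can make the installation idempotent and guard against typos in the action. This is a sketch assuming the snap-based agent on Ubuntu shown above; install_ssm_agent, ssm_agent and DRY_RUN are hypothetical names.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for the snap-packaged SSM agent on Ubuntu.
# DRY_RUN=1 only prints the commands instead of running them.
SSM_AGENT_UNIT="snap.amazon-ssm-agent.amazon-ssm-agent.service"

# Install the agent only if the snap is not present yet.
install_ssm_agent() {
  if command -v snap >/dev/null 2>&1 && snap list amazon-ssm-agent >/dev/null 2>&1; then
    echo "amazon-ssm-agent already installed"
    return 0
  fi
  ${DRY_RUN:+echo} sudo snap install amazon-ssm-agent --classic
}

# start/stop/restart/status, rejecting anything else.
ssm_agent() {
  local action="${1:-status}"
  case "$action" in
    start|stop|restart|status)
      ${DRY_RUN:+echo} sudo systemctl "$action" "$SSM_AGENT_UNIT"
      ;;
    *)
      echo "usage: ssm_agent {start|stop|restart|status}" >&2
      return 1
      ;;
  esac
}
```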

Create S3 buckets

For our demonstration, we’ll need three S3 buckets:

- s3://{namespace}-scripts/ - Holds backup.sh, the script executed remotely on all instances.

- s3://{namespace}-scripts-logs/ - Stores the output of every Run Command execution.

- s3://{namespace}-backups/ - Where the Nginx logs are archived.

Of course, you are free to set up the buckets however you prefer.
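Creating the three buckets can also be scripted. A minimal sketch, assuming the us-west-2 region used elsewhere in this article; create_demo_buckets and DRY_RUN are hypothetical names, and since bucket names are globally unique, the namespace should be distinctive.

```bash
#!/usr/bin/env bash
# Hypothetical helper: create the scripts, scripts-logs and backups buckets.
# DRY_RUN=1 only prints the aws commands instead of running them.
create_demo_buckets() {
  local namespace="$1" region="${2:-us-west-2}"
  if [[ -z "$namespace" ]]; then
    echo "usage: create_demo_buckets <namespace> [region]" >&2
    return 1
  fi
  local suffix
  for suffix in scripts scripts-logs backups; do
    # Stop at the first failure (e.g. the name is already taken).
    ${DRY_RUN:+echo} aws s3 mb "s3://${namespace}-${suffix}" --region "$region" || return 1
  done
}
```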

Add permissions

SSM Agent permissions

Permissions are granted to EC2 instances through the IAM role attached to them. Open the IAM console, select the role of the instances where commands will run, and attach the AmazonEC2RoleforSSM managed policy. Save it, and within a few minutes the instances can be managed by AWS SSM. (AWS now recommends AmazonSSMManagedInstanceCore as the successor of this policy.)
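The same step from the CLI might look like this, together with a check that the instances have registered with SSM. This is a sketch: the helper names and DRY_RUN are hypothetical, and SSM_POLICY_ARN can be overridden with arn:aws:iam::aws:policy/AmazonSSMManagedInstanceCore, which AWS recommends instead of AmazonEC2RoleforSSM.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: attach the SSM managed policy to an instance role and
# show the SSM ping status of instances. DRY_RUN=1 only prints the aws commands.
SSM_POLICY_ARN="${SSM_POLICY_ARN:-arn:aws:iam::aws:policy/service-role/AmazonEC2RoleforSSM}"

enable_ssm_for_role() {
  local role="$1"
  [[ -n "$role" ]] || { echo "usage: enable_ssm_for_role <role-name>" >&2; return 1; }
  ${DRY_RUN:+echo} aws iam attach-role-policy \
    --role-name "$role" --policy-arn "$SSM_POLICY_ARN"
}

# Registered instances report PingStatus "Online" once the agent is connected.
ssm_ping_status() {
  (( $# > 0 )) || { echo "usage: ssm_ping_status <instance-id>..." >&2; return 1; }
  local ids
  ids="$(IFS=,; echo "$*")"   # join instance IDs with commas
  ${DRY_RUN:+echo} aws ssm describe-instance-information \
    --filters "Key=InstanceIds,Values=${ids}" \
    --query "InstanceInformationList[].[InstanceId,PingStatus]" \
    --output text
}
```

An instance that is missing from the ssm_ping_status output has not registered yet, which usually points to the agent or the role.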

For details on configuring stricter, tag-based permissions, see the AWS Docs[2].

S3 bucket permissions

Because the instance downloads the script from an S3 bucket, its IAM role needs permission to download objects (s3:GetObject). And because it uploads the Nginx logs and the Run Command output to S3, it also needs permission to upload objects (s3:PutObject). Add or update the IAM policy with:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "DownloadScripts",
      "Effect": "Allow",
      "Action": "s3:GetObject",
      "Resource": [
        "arn:aws:s3:::{namespace}-scripts/*",
        "arn:aws:s3:::{namespace}-scripts"
      ]
    },
    {
      "Sid": "UploadBackupsAndOutput",
      "Effect": "Allow",
      "Action": [
        "s3:PutObject"
      ],
      "Resource": [
        "arn:aws:s3:::{namespace}-backups",
        "arn:aws:s3:::{namespace}-backups/*",
        "arn:aws:s3:::{namespace}-scripts-logs/*"
      ]
    }
  ]
}
```
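To avoid replacing {namespace} by hand, the policy can be rendered and attached as an inline role policy. A sketch under these assumptions: the helper names, the policy name ${ns}-ssm-s3 and DRY_RUN are hypothetical. It uses unique Sids (IAM requires them to be unique within a policy), includes the {namespace}-scripts-logs bucket for the Run Command output, and keeps only object-level ARNs because GetObject and PutObject act on objects.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: render the S3 policy for one namespace and attach it
# to the instance role as an inline policy. DRY_RUN=1 only prints the aws command.
render_s3_policy() {
  local ns="$1"
  [[ -n "$ns" ]] || { echo "usage: render_s3_policy <namespace>" >&2; return 1; }
  cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "DownloadScripts",
      "Effect": "Allow",
      "Action": "s3:GetObject",
      "Resource": ["arn:aws:s3:::${ns}-scripts/*"]
    },
    {
      "Sid": "UploadBackupsAndOutput",
      "Effect": "Allow",
      "Action": "s3:PutObject",
      "Resource": [
        "arn:aws:s3:::${ns}-backups/*",
        "arn:aws:s3:::${ns}-scripts-logs/*"
      ]
    }
  ]
}
EOF
}

put_s3_policy() {
  local role="$1" ns="$2" file rc
  [[ -n "$role" && -n "$ns" ]] || { echo "usage: put_s3_policy <role-name> <namespace>" >&2; return 1; }
  file="$(mktemp)" || return 1
  render_s3_policy "$ns" > "$file" || { rm -f "$file"; return 1; }
  ${DRY_RUN:+echo} aws iam put-role-policy \
    --role-name "$role" \
    --policy-name "${ns}-ssm-s3" \
    --policy-document "file://${file}"
  rc=$?
  rm -f "$file"   # the CLI has already read the file at this point
  return "$rc"
}
```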

Create backup.sh

Create a backup.sh script that takes a single argument, ${BUCKET}: the full S3 URI of the backup bucket, e.g. s3://{namespace}-backups. Upload the file to s3://{namespace}-scripts/backup.sh so that AWS SSM Run Command can download it. By the way, while testing, I recommend removing the clear_logs step first.

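```bash
#!/bin/bash
# Usage: backup.sh s3://{namespace}-backups
# Stop on the first error, so logs are never cleared after a failed upload.
set -euo pipefail

BUCKET="${1:?Usage: backup.sh s3://<bucket>}"

# aws s3 cp treats anything without s3:// as a local path
case "${BUCKET}" in
  s3://*) ;;
  *) echo "BUCKET must be an s3:// URI, got: ${BUCKET}" >&2; exit 1 ;;
esac

TIME=$(date +%H:%M:%S)
YEAR=$(date +%Y)
MONTH=$(date +%m)
DAY=$(date +%d)
S3_PATH=""

get_instance_id(){
  # ec2metadata ships with Ubuntu cloud images
  ec2metadata --instance-id
}

copy_logs(){
  INSTANCE_ID=$(get_instance_id)
  echo "Instance id: ${INSTANCE_ID}"

  # pattern: s3://<bucket>/<year>/<month>/<day>/<instance-id>/<time>/*.log.*.gz
  S3_PATH="${BUCKET%/}/${YEAR}/${MONTH}/${DAY}/${INSTANCE_ID}/${TIME}/"
  echo "S3 path: ${S3_PATH}"

  if [ -d "/var/log/nginx/" ]; then
    echo "Archiving /var/log/nginx"
    aws s3 cp /var/log/nginx/ "${S3_PATH}" --recursive \
      --exclude "*" \
      --include "access.log.*.gz" \
      --include "error.log.*.gz"
  fi
}

clear_logs(){
  echo "Clearing logs"
  if [ -d "/var/log/nginx/" ]; then
    # only the rotated, compressed files uploaded above
    rm -f /var/log/nginx/access.log.*.gz /var/log/nginx/error.log.*.gz
  fi
}

logs(){
  echo "Running backup script"
  copy_logs
  clear_logs
}

logs
```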
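Uploading the script can be combined with a quick syntax check, so a typo never reaches the fleet. A minimal sketch; upload_backup_script and DRY_RUN are hypothetical names, and bash -n only catches syntax errors, not logic errors.

```bash
#!/usr/bin/env bash
# Hypothetical helper: syntax-check a script and upload it to the scripts bucket
# read by AWS-RunRemoteScript. DRY_RUN=1 only prints the aws command.
upload_backup_script() {
  local namespace="$1" script="${2:-backup.sh}"
  [[ -n "$namespace" ]] || { echo "usage: upload_backup_script <namespace> [script]" >&2; return 1; }
  [[ -f "$script" ]] || { echo "not found: $script" >&2; return 1; }
  # Parse the script without executing it.
  bash -n "$script" || return 1
  ${DRY_RUN:+echo} aws s3 cp "$script" "s3://${namespace}-scripts/$(basename "$script")"
}
```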

Run Command Setup

Let’s configure Run Command to execute the remote backup.sh script.

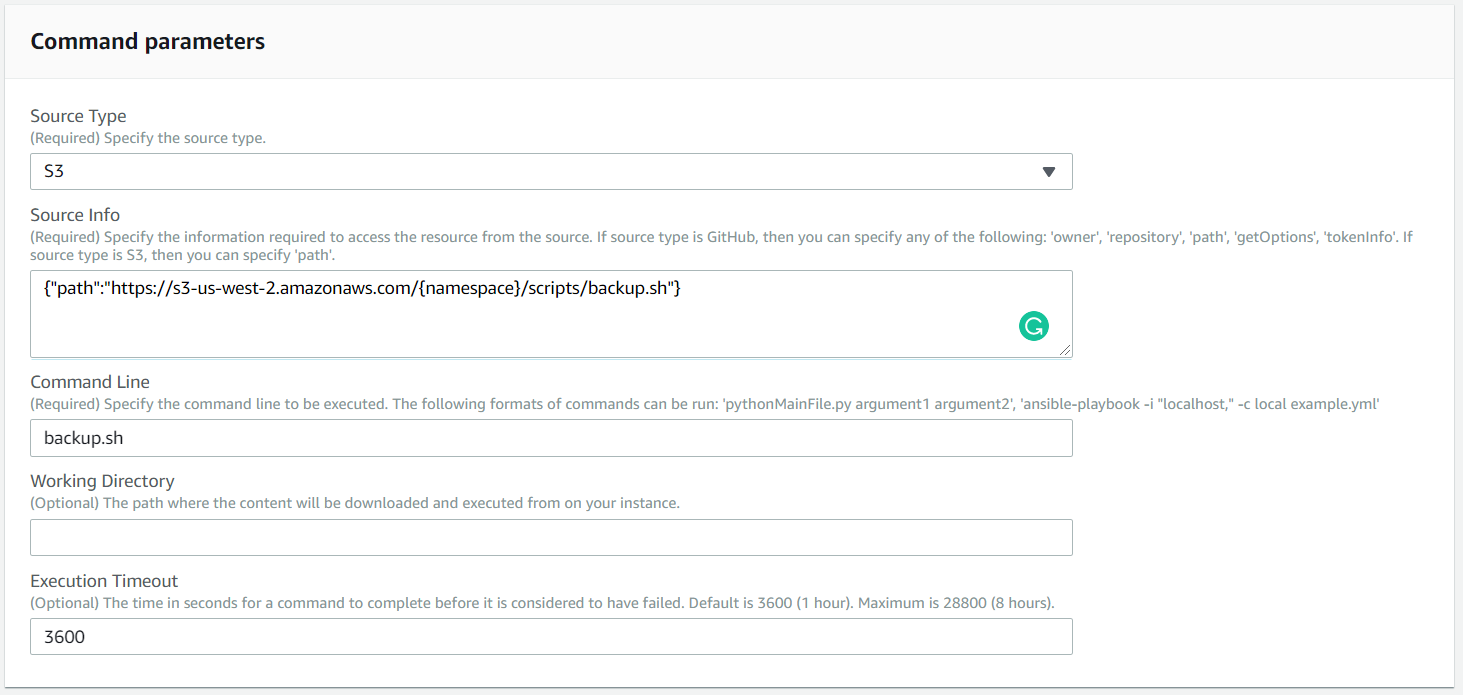

1. Command Parameters

The script location and the command-line arguments it is executed with.

Source Type - Where backup.sh is stored. Select S3.

Source Info - A JSON object whose path property holds the full HTTPS URL of the script: {"path": "https://s3-us-west-2.amazonaws.com/{namespace}-scripts/backup.sh"}

Command Line - The script invocation with its argument: backup.sh s3://{namespace}-backups

Execution Timeout - Maximum run time of the script, in seconds.

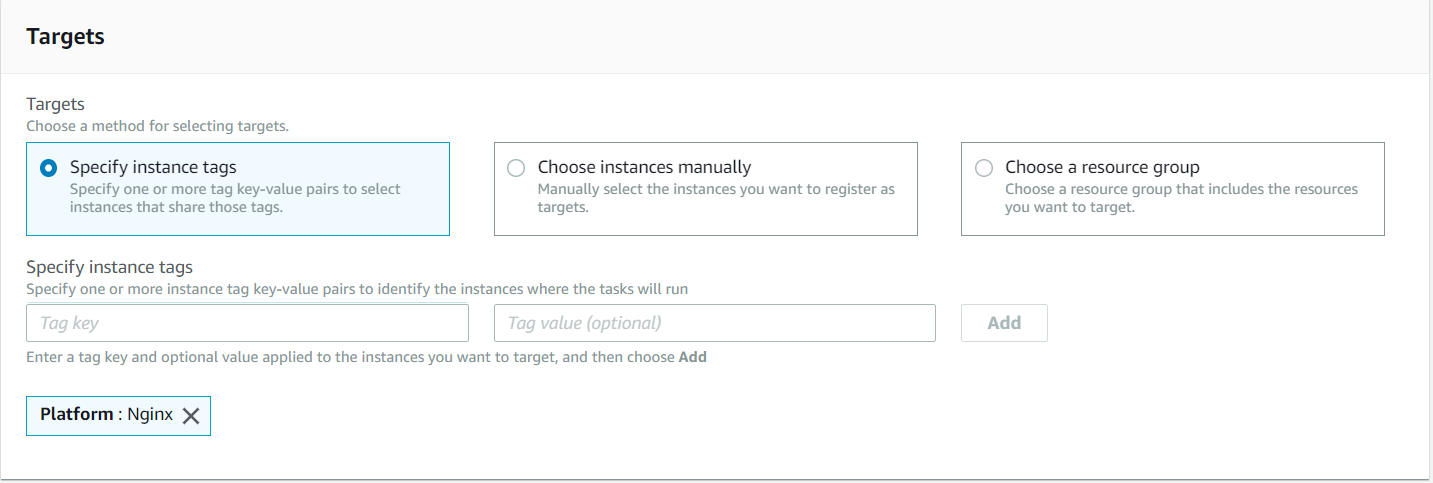

2. Targets

Targets define which instances the command runs on. There are three options: Find by tags, Choose instances manually, and Choose resource group. In our environment, tags are the most convenient, because we can scale the number of instances up or down without reconfiguring Run Command. We can add any number of tags to identify the EC2 instances.
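Before sending a command, it helps to preview which instances a tag target will hit. A sketch: tag_target and preview_tag_targets are hypothetical helpers, the preview assumes only running instances matter, and the printed Key=tag:...,Values=... string is the shorthand form accepted by send-command --targets.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: build a tag target for send-command and list the running
# EC2 instances that carry the same tag. DRY_RUN=1 only prints the aws command.
tag_target() {
  local key="$1" value="$2"
  [[ -n "$key" && -n "$value" ]] || { echo "usage: tag_target <key> <value>" >&2; return 1; }
  echo "Key=tag:${key},Values=${value}"
}

preview_tag_targets() {
  local key="$1" value="$2"
  [[ -n "$key" && -n "$value" ]] || { echo "usage: preview_tag_targets <key> <value>" >&2; return 1; }
  ${DRY_RUN:+echo} aws ec2 describe-instances \
    --filters "Name=tag:${key},Values=${value}" "Name=instance-state-name,Values=running" \
    --query "Reservations[].Instances[].InstanceId" \
    --output text
}
```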

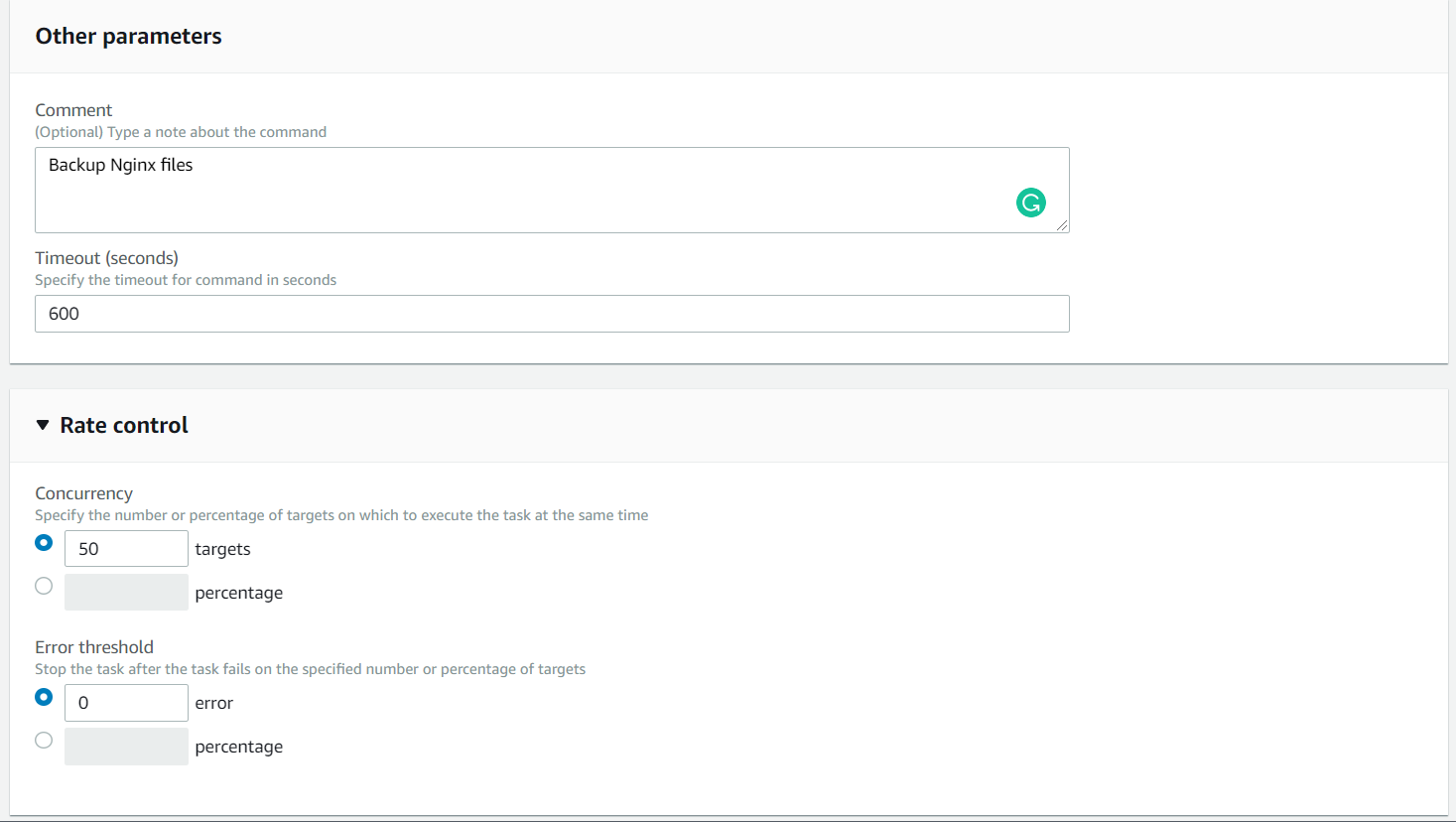

3. Other Parameters and Rate control

Additional parameters that control how the command runs:

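Comment - A short description that helps us find the command and its output in the history.

Timeout - Delivery timeout in seconds: if the command has not started running on an instance within this time, it is not run there.

Concurrency - How many instances the script runs on at the same time (a number or a percentage of targets).

Error threshold - How many errors (a number or a percentage) are allowed before Run Command stops sending the command to the remaining targets.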
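Both rate-control values accept either an absolute count or a percentage of targets, for example --max-concurrency "10%" with --max-errors "1". The check below is a simplified local sanity check meant as an illustration; is_rate_value is a hypothetical name, and AWS applies its own, stricter validation.

```bash
#!/usr/bin/env bash
# Hypothetical, simplified check for --max-concurrency / --max-errors values:
# accepts a non-negative integer ("50") or a percentage from 0% to 100% ("10%").
is_rate_value() {
  local value="$1"
  if [[ "$value" =~ ^([0-9]+)%$ ]]; then
    # 10# forces base 10, so values like "08%" are not read as octal.
    (( 10#${BASH_REMATCH[1]} <= 100 ))
  else
    [[ "$value" =~ ^[0-9]+$ ]]
  fi
}
```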

4. CLI output

The best part about filling in all these parameters is the generated CLI command. Once we know the syntax, we can quickly write automation scripts around aws ssm send-command and run them from anywhere, as long as we have the right permissions.

Sample CLI output

```bash
aws ssm send-command \
  --document-name "AWS-RunRemoteScript" \
  --document-version "1" \
  --targets '[{"Key":"tag:App","Values":["Nginx"]}]' \
  --parameters '{"sourceType":["S3"],"sourceInfo":["{\"path\":\"https://s3-us-west-2.amazonaws.com/{namespace}-scripts/backup.sh\"}"],"commandLine":["backup.sh s3://{namespace}-backups"],"workingDirectory":[""],"executionTimeout":["3600"]}' \
  --comment "Backup Nginx logs" \
  --timeout-seconds 600 \
  --max-concurrency "50" \
  --max-errors "0" \
  --output-s3-bucket-name "{namespace}-scripts-logs" \
  --output-s3-key-prefix "Nginx"
```

Note: replace the {namespace} placeholders with your actual bucket names, otherwise the command will not work.
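To reuse the sample without editing placeholders every time, it can be wrapped in a function that fills in the namespace, passes the backup bucket to backup.sh as its argument, and prints the new CommandId. A sketch: send_backup_command, the us-west-2 default and DRY_RUN are my own assumptions; the flags are the ones from the generated command.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper around the generated command for one namespace.
# DRY_RUN=1 only prints the aws command instead of running it.
send_backup_command() {
  local ns="$1" region="${2:-us-west-2}"
  [[ -n "$ns" ]] || { echo "usage: send_backup_command <namespace> [region]" >&2; return 1; }
  local url="https://s3-${region}.amazonaws.com/${ns}-scripts/backup.sh"
  local params
  # sourceInfo is a JSON string inside JSON, hence the escaped inner quotes.
  params="$(printf '{"sourceType":["S3"],"sourceInfo":["{\\"path\\":\\"%s\\"}"],"commandLine":["backup.sh s3://%s-backups"],"executionTimeout":["3600"]}' "$url" "$ns")"
  ${DRY_RUN:+echo} aws ssm send-command \
    --document-name "AWS-RunRemoteScript" \
    --targets "Key=tag:App,Values=Nginx" \
    --parameters "$params" \
    --comment "Backup Nginx logs" \
    --timeout-seconds 600 \
    --max-concurrency "50" \
    --max-errors "0" \
    --output-s3-bucket-name "${ns}-scripts-logs" \
    --output-s3-key-prefix "Nginx" \
    --region "$region" \
    --query "Command.CommandId" --output text
}
```

Called from a Jenkins job, send_backup_command acme would print just the CommandId, which is convenient for follow-up status checks.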

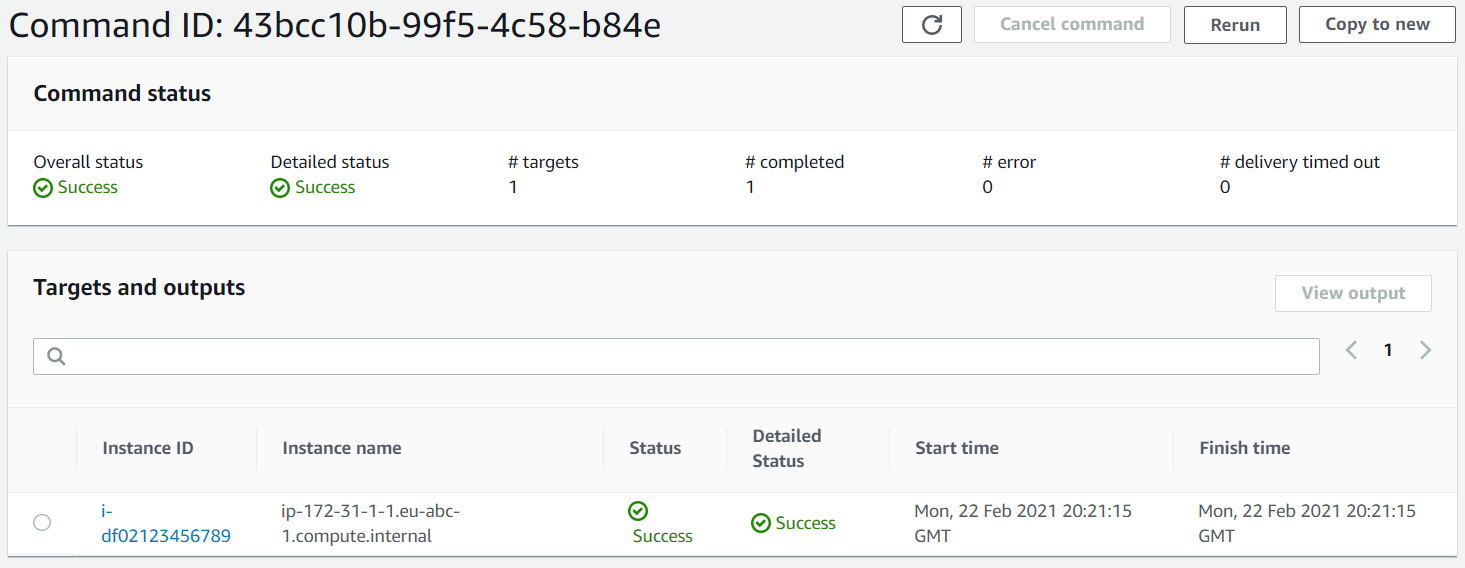

Run Command Execution

Whether we start Run Command from the CLI or the Console, the task status is available in the Run Command History tab. There we can see important metrics of the command, including how many targets it ran on. Two identifiers pin down an execution: the Command ID and the Instance ID. Together with the command description and parameters, they give a complete overview of the execution. If we configured an output S3 bucket, the full stdout and stderr of every instance are stored in it.
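The same overview is available from the CLI. A sketch, assuming the output bucket and the Nginx key prefix from the sample command; command_report and DRY_RUN are hypothetical, and my understanding is that Run Command stores output under keys starting with <prefix>/<command-id>/<instance-id>/.

```bash
#!/usr/bin/env bash
# Hypothetical helper: per-instance status of one command, plus the S3 objects
# holding its full stdout/stderr. DRY_RUN=1 only prints the aws commands.
command_report() {
  local command_id="$1" bucket="$2" prefix="${3:-Nginx}"
  [[ -n "$command_id" && -n "$bucket" ]] || { echo "usage: command_report <command-id> <bucket> [prefix]" >&2; return 1; }
  ${DRY_RUN:+echo} aws ssm list-command-invocations \
    --command-id "$command_id" \
    --query "CommandInvocations[].[InstanceId,Status]" \
    --output text
  # Assumed layout: <prefix>/<command-id>/<instance-id>/...
  ${DRY_RUN:+echo} aws s3 ls "s3://${bucket}/${prefix}/${command_id}/" --recursive
}
```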

Troubleshooting

Sometimes commands don’t run, and because we never log in to the instance, the reason can be confusing to track down. My first recommendation is to double-check the S3 policies attached to the instance role. For more details, you can always check the SSM agent logs on the EC2 instance itself:

```text
/var/log/amazon/ssm/amazon-ssm-agent.log
/var/log/amazon/ssm/errors.log
/var/log/amazon/ssm/audits/amazon-ssm-agent-audit-YYYY-MM-DD
```
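A small helper can collect the agent state and the tail of these logs in one go. A sketch: ssm_diagnose and the SSM_LOG_DIR override are hypothetical, and it assumes the snap-based agent unit from the installation section.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the agent service state and the last N lines
# of the main SSM agent logs. SSM_LOG_DIR overrides the default log directory.
ssm_diagnose() {
  local log_dir="${SSM_LOG_DIR:-/var/log/amazon/ssm}" lines="${1:-20}" f
  systemctl is-active snap.amazon-ssm-agent.amazon-ssm-agent.service 2>/dev/null \
    || echo "agent service is not active (or systemctl is unavailable)"
  for f in "$log_dir/amazon-ssm-agent.log" "$log_dir/errors.log"; do
    if [[ -f "$f" ]]; then
      echo "== $f =="
      tail -n "$lines" "$f"
    else
      echo "== $f (missing) =="
    fi
  done
}
```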

Advanced usage

The console’s generated command is a nice introduction to the CLI. Combined with other AWS CLI commands, it lets us build handy tools that execute commands remotely and show live progress. I created the utility remote_script.sh to execute tasks wrapped as .sh scripts.

Here is my GitHub gist, and you’re welcome to try it!

Example

```bash
./remote_script.sh \
  --tagName "App" \
  --tagValue "Nginx" \
  --scriptFile "backup.sh" \
  --command "backup.sh" \
  --comment "Backup: Nginx logs" \
  --timeout 10
```

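The following permissions are required (CLI command and the matching IAM action):

- aws ec2 describe-instances (ec2:DescribeInstances)

- aws ssm send-command (ssm:SendCommand)

- aws ssm list-command-invocations (ssm:ListCommandInvocations)

- aws ssm get-command-invocation (ssm:GetCommandInvocation)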
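Expressed as an IAM policy for the machine that runs remote_script.sh (Jenkins in our case), these four permissions might look like the sketch below. caller_policy is a hypothetical helper, and Resource "*" is a simplification; ssm:SendCommand can be narrowed to specific documents and instances.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print an IAM policy covering the four calls listed above.
caller_policy() {
  cat <<'EOF'
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "RunCommandCaller",
      "Effect": "Allow",
      "Action": [
        "ec2:DescribeInstances",
        "ssm:SendCommand",
        "ssm:ListCommandInvocations",
        "ssm:GetCommandInvocation"
      ],
      "Resource": "*"
    }
  ]
}
EOF
}
```

The output can be saved to a file and attached with aws iam put-role-policy, just like the instance policy.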

Useful links

Docs to read and tools to use:

- My remote_script.sh: a bash implementation of AWS Run Command

- AWS article: Remotely Run Commands on an EC2 Instance

- Install SSM Agent on EC2: Manually install SSM Agent on EC2 instances for Linux

- Permissions for Run Command: Setting up Run Command